pHash performs its mathematical operations on every pixel of the image at its original size. As a result, when an image is resized, the hash differs slightly depending on the image size. My assumption is that if every image larger than a certain size is first resized down to that size, the overall matching quality will improve.
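The normalization rule can be sketched as a small size calculation. This is only an illustration under my own assumptions: the function name `normalized_size` and the `max_width` parameter are hypothetical, and the aspect ratio is assumed to be preserved. The actual resize and hashing would be done by whatever image library is in use.

```python
from typing import Tuple


def normalized_size(width: int, height: int, max_width: int = 1000) -> Tuple[int, int]:
    """Return the size an image would be resized to before hashing.

    Hypothetical helper: images wider than max_width are scaled down to
    max_width (keeping the aspect ratio); smaller images are left as they are.
    """
    if width <= max_width:
        return width, height  # not bigger than the limit, so no resize
    scale = max_width / width
    return max_width, max(1, round(height * scale))


# Example: a 4000x3000 image is normalized to 1000x750,
# while an 800x600 image keeps its original size.
print(normalized_size(4000, 3000, 1000))
print(normalized_size(800, 600, 1000))
```

Scaling only downward matches the assumption above: images that are already smaller than the limit are hashed at their original size.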

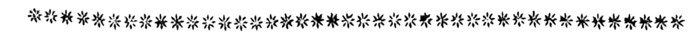

I tested the same set of image samples as in the previous post; however, because of the processing time, the comparison was limited to 3644 images.

To find a good normalization size, I resized the images to widths of 2000, 1500, and 1000. I then measured the Hamming distance between each image and its copies resized from 90% down to 10%.
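The comparison step can be sketched roughly as follows. This assumes each hash is held as a plain integer (for example a 64-bit pHash value); the helper names `hamming_distance`, `scaled_widths`, and `count_mismatches` are hypothetical and not part of any pHash API.

```python
from typing import Iterable, List


def hamming_distance(hash_a: int, hash_b: int) -> int:
    """Number of bit positions in which two integer hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


def scaled_widths(width: int) -> List[int]:
    """Widths of the test copies, from 90% down to 10% in 10% steps."""
    return [max(1, round(width * pct / 100)) for pct in range(90, 0, -10)]


def count_mismatches(reference: int, resized_hashes: Iterable[int], threshold: int) -> int:
    """Count resized copies whose distance to the reference exceeds the threshold."""
    return sum(1 for h in resized_hashes if hamming_distance(reference, h) > threshold)


# Example with made-up hash values: the distances are 1, 5, and 7,
# so two of the three copies exceed a threshold of 4.
print(scaled_widths(1000))
print(count_mismatches(0b0, [0b1, 0b11111, 0b1111111], threshold=4))
```

A copy counts as a mismatch only when its distance is strictly greater than the threshold, which is how the tables below are grouped (greater than 4 and greater than 6).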

Hamming distance greater than 4

normalization size 2000

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 1 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 90% | 0 | 0 | 0 | 0 | 6 | 17 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 0.23 |
| 80% | 0 | 0 | 0 | 1 | 12 | 19 | 80% | 0.00 | 0.00 | 0.00 | 0.01 | 0.16 | 0.25 |
| 70% | 0 | 0 | 0 | 1 | 18 | 36 | 70% | 0.00 | 0.00 | 0.00 | 0.01 | 0.24 | 0.48 |
| 60% | 0 | 0 | 0 | 12 | 48 | 87 | 60% | 0.00 | 0.00 | 0.00 | 0.16 | 0.64 | 1.16 |
| 50% | 0 | 0 | 3 | 26 | 77 | 141 | 50% | 0.00 | 0.00 | 0.04 | 0.35 | 1.03 | 1.89 |
| 40% | 0 | 0 | 9 | 62 | 172 | 272 | 40% | 0.00 | 0.00 | 0.12 | 0.83 | 2.30 | 3.64 |
| 30% | 1 | 12 | 54 | 156 | 333 | 475 | 30% | 0.01 | 0.16 | 0.72 | 2.09 | 4.45 | 6.35 |
| 20% | 27 | 99 | 246 | 424 | 693 | 851 | 20% | 0.36 | 1.32 | 3.29 | 5.67 | 9.27 | 11.38 |
| 10% | 163 | 360 | 753 | 1093 | 1442 | 1636 | 10% | 2.18 | 4.82 | 10.07 | 14.62 | 19.29 | 21.89 |

normalization size 1500

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 1 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 90% | 0 | 0 | 0 | 0 | 2 | 13 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.03 | 0.17 |
| 80% | 0 | 0 | 0 | 0 | 7 | 14 | 80% | 0.00 | 0.00 | 0.00 | 0.00 | 0.09 | 0.19 |
| 70% | 0 | 0 | 0 | 1 | 15 | 33 | 70% | 0.00 | 0.00 | 0.00 | 0.01 | 0.20 | 0.44 |
| 60% | 0 | 0 | 0 | 2 | 25 | 64 | 60% | 0.00 | 0.00 | 0.00 | 0.03 | 0.33 | 0.86 |
| 50% | 0 | 0 | 0 | 7 | 46 | 110 | 50% | 0.00 | 0.00 | 0.00 | 0.09 | 0.62 | 1.47 |
| 40% | 0 | 0 | 4 | 25 | 123 | 223 | 40% | 0.00 | 0.00 | 0.05 | 0.33 | 1.65 | 2.98 |
| 30% | 0 | 0 | 18 | 86 | 247 | 389 | 30% | 0.00 | 0.00 | 0.24 | 1.15 | 3.30 | 5.20 |
| 20% | 6 | 27 | 116 | 257 | 520 | 678 | 20% | 0.08 | 0.36 | 1.55 | 3.44 | 6.96 | 9.07 |
| 10% | 137 | 308 | 654 | 969 | 1313 | 1507 | 10% | 1.83 | 4.12 | 8.75 | 12.96 | 17.57 | 20.16 |

normalization size 1000

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 1 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 90% | 0 | 0 | 0 | 0 | 0 | 11 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.15 |
| 80% | 0 | 0 | 0 | 0 | 0 | 7 | 80% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.09 |
| 70% | 0 | 0 | 0 | 0 | 5 | 23 | 70% | 0.00 | 0.00 | 0.00 | 0.00 | 0.07 | 0.31 |
| 60% | 0 | 0 | 0 | 0 | 6 | 45 | 60% | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 0.60 |
| 50% | 0 | 0 | 0 | 0 | 26 | 90 | 50% | 0.00 | 0.00 | 0.00 | 0.00 | 0.35 | 1.20 |
| 40% | 0 | 0 | 0 | 3 | 56 | 156 | 40% | 0.00 | 0.00 | 0.00 | 0.04 | 0.75 | 2.09 |
| 30% | 0 | 0 | 2 | 17 | 132 | 274 | 30% | 0.00 | 0.00 | 0.03 | 0.23 | 1.77 | 3.67 |
| 20% | 0 | 4 | 39 | 122 | 354 | 512 | 20% | 0.00 | 0.05 | 0.52 | 1.63 | 4.74 | 6.85 |
| 10% | 61 | 161 | 406 | 679 | 999 | 1193 | 10% | 0.82 | 2.15 | 5.43 | 9.08 | 13.36 | 15.96 |

Hamming distance greater than 6

normalization size 2000

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 0 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 90% | 0 | 0 | 0 | 0 | 0 | 1 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 80% | 0 | 0 | 0 | 1 | 2 | 3 | 80% | 0.00 | 0.00 | 0.00 | 0.01 | 0.03 | 0.04 |
| 70% | 0 | 0 | 0 | 0 | 4 | 11 | 70% | 0.00 | 0.00 | 0.00 | 0.00 | 0.05 | 0.15 |
| 60% | 0 | 0 | 0 | 0 | 8 | 21 | 60% | 0.00 | 0.00 | 0.00 | 0.00 | 0.11 | 0.28 |
| 50% | 0 | 0 | 0 | 6 | 20 | 46 | 50% | 0.00 | 0.00 | 0.00 | 0.08 | 0.27 | 0.62 |
| 40% | 0 | 0 | 4 | 21 | 46 | 94 | 40% | 0.00 | 0.00 | 0.05 | 0.28 | 0.62 | 1.26 |
| 30% | 0 | 0 | 11 | 45 | 106 | 175 | 30% | 0.00 | 0.00 | 0.15 | 0.60 | 1.42 | 2.34 |
| 20% | 4 | 14 | 63 | 142 | 286 | 381 | 20% | 0.05 | 0.19 | 0.84 | 1.90 | 3.83 | 5.10 |
| 10% | 59 | 153 | 347 | 539 | 752 | 869 | 10% | 0.79 | 2.05 | 4.64 | 7.21 | 10.06 | 11.63 |

normalization size 1500

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 0 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 90% | 0 | 0 | 0 | 0 | 0 | 1 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 80% | 0 | 0 | 0 | 0 | 0 | 1 | 80% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 70% | 0 | 0 | 0 | 0 | 2 | 9 | 70% | 0.00 | 0.00 | 0.00 | 0.00 | 0.03 | 0.12 |
| 60% | 0 | 0 | 0 | 0 | 6 | 19 | 60% | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 0.25 |
| 50% | 0 | 0 | 0 | 1 | 10 | 36 | 50% | 0.00 | 0.00 | 0.00 | 0.01 | 0.13 | 0.48 |
| 40% | 0 | 0 | 0 | 8 | 28 | 76 | 40% | 0.00 | 0.00 | 0.00 | 0.11 | 0.37 | 1.02 |
| 30% | 0 | 0 | 3 | 26 | 81 | 150 | 30% | 0.00 | 0.00 | 0.04 | 0.35 | 1.08 | 2.01 |
| 20% | 1 | 4 | 30 | 88 | 221 | 316 | 20% | 0.01 | 0.05 | 0.40 | 1.18 | 2.96 | 4.23 |
| 10% | 39 | 99 | 257 | 433 | 639 | 756 | 10% | 0.52 | 1.32 | 3.44 | 5.79 | 8.55 | 10.11 |

normalization size 1000

| Resize | 5000 < | 4000 < | 3000 < | 2000 < | 1000 < | < 1000 | Resize | 5000 < (%) | 4000 < (%) | 3000 < (%) | 2000 < (%) | 1000 < (%) | < 1000 (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 100% | 0 | 0 | 0 | 0 | 0 | 0 | 100% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 90% | 0 | 0 | 0 | 0 | 0 | 1 | 90% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 80% | 0 | 0 | 0 | 0 | 0 | 1 | 80% | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 |
| 70% | 0 | 0 | 0 | 0 | 1 | 8 | 70% | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 | 0.11 |
| 60% | 0 | 0 | 0 | 0 | 2 | 15 | 60% | 0.00 | 0.00 | 0.00 | 0.00 | 0.03 | 0.20 |
| 50% | 0 | 0 | 0 | 0 | 9 | 35 | 50% | 0.00 | 0.00 | 0.00 | 0.00 | 0.12 | 0.47 |
| 40% | 0 | 0 | 0 | 0 | 14 | 62 | 40% | 0.00 | 0.00 | 0.00 | 0.00 | 0.19 | 0.83 |
| 30% | 0 | 0 | 0 | 4 | 35 | 104 | 30% | 0.00 | 0.00 | 0.00 | 0.05 | 0.47 | 1.39 |
| 20% | 0 | 0 | 11 | 39 | 138 | 233 | 20% | 0.00 | 0.00 | 0.15 | 0.52 | 1.85 | 3.12 |
| 10% | 14 | 38 | 135 | 270 | 449 | 566 | 10% | 0.19 | 0.51 | 1.81 | 3.61 | 6.01 | 7.57 |

Conclusion

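According to the test results, resizing before hashing gives a better matching percentage, and the smaller the normalization size, the fewer resized copies exceed the Hamming distance threshold. This can therefore be a way to improve matching. However, the false-positive matching percentage is also important and has to be weighed alongside these results.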